The field of Natural Language Processing (NLP) has made significant advances in enabling machines to process and generate human language. One architecture central to these advances is the Encoder-Decoder model, also known as the Sequence-to-Sequence (Seq2Seq) model. It is widely used in tasks such as Machine Translation and Text Summarization, and it works by encoding an input sequence into a fixed-size representation and then generating an output sequence from that representation. However, this design creates a bottleneck that can cause information loss, especially with long sequences. One effective solution to this problem is the Attention mechanism.

In this article, the fundamental components of the Encoder-Decoder architecture, the bottleneck problem it faces, and the solution provided by the Attention mechanism will be examined in detail.

The Encoder-Decoder Architecture: Structural Components

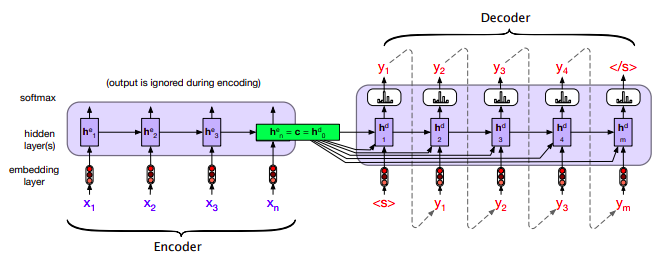

Encoder-Decoder architecture with Context vector

Encoder-Decoder models typically consist of two main modules, often implemented with recurrent neural network (RNN) architectures such as LSTMs or GRUs, which communicate through a Context Vector:

- Encoder:

  - Function: Processes the input sequence (e.g., a sentence in the source language) sequentially.

  - Operation: At each time step, it computes a new hidden state from the current input element and the hidden state of the previous time step. This continues until the entire input sequence has been processed.

  - Output: Produces a fixed-size vector, usually the final hidden state, that serves as a holistic representation of the input sequence. This vector is called the Context Vector.

- Context Vector ($c$):

  - Function: Carries the semantic content, or summary, of the input sequence processed by the Encoder, transferring information from the Encoder to the Decoder.

  - Nature: It has a fixed size and must condense all of the input information into this single vector.

- Decoder:

  - Function: Generates the target sequence (e.g., the translation in the target language) sequentially, using the Context Vector as its initial information.

  - Operation: It is typically initialized with the Context Vector and receives a special start-of-sequence token (e.g., `<SOS>`) as its first input. At each subsequent time step, it predicts the next element from the element generated in the previous step and its own hidden state. This autoregressive generation continues until a special end-of-sequence token (e.g., `<EOS>`) is produced or a predefined maximum sequence length is reached.

This structure is well suited to tasks in which the input and output sequences can have different lengths and there is no direct, position-by-position mapping between them.
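To make the control flow concrete, here is a minimal, untrained sketch in plain Python (standard library only). It assumes a vanilla tanh RNN cell in place of the LSTM or GRU mentioned above, one-hot token inputs, and greedy decoding; the names and sizes (`HIDDEN`, `VOCAB`, `SOS`, `EOS`, `rnn_cell`, `encode`, `decode`) are hypothetical and not taken from any library. The encoder folds the input into its final hidden state (the Context Vector), and the decoder generates tokens from that vector until it emits `EOS` or hits a length limit.

```python
import math
import random

# Hypothetical toy sizes and token ids, chosen only for illustration.
HIDDEN = 4        # size of every hidden state (and of the context vector)
VOCAB = 6         # token ids 0..5
SOS, EOS = 0, 1   # hypothetical start-of-sequence / end-of-sequence ids


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def one_hot(token):
    """Represent a token id as a one-hot vector of length VOCAB."""
    v = [0.0] * VOCAB
    v[token] = 1.0
    return v


def rand_matrix(rows, cols, rng):
    """Random (untrained) weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def rnn_cell(w_x, w_h, x, h):
    """Vanilla tanh RNN step: h_t = tanh(W_x x_t + W_h h_{t-1})."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]


def encode(tokens, w_x, w_h):
    """Read the input one token at a time; return (context vector, all hidden states)."""
    h = [0.0] * HIDDEN
    states = []
    for tok in tokens:
        h = rnn_cell(w_x, w_h, one_hot(tok), h)
        states.append(h)
    return h, states  # the final hidden state is the fixed-size context vector


def decode(context, w_x, w_h, w_out, max_len=10):
    """Greedy autoregressive decoding, initialized with the context vector."""
    h, prev, output = context, SOS, []
    for _ in range(max_len):
        h = rnn_cell(w_x, w_h, one_hot(prev), h)  # feed back the previous token
        logits = matvec(w_out, h)
        prev = max(range(VOCAB), key=logits.__getitem__)  # highest-scoring token
        if prev == EOS:
            break
        output.append(prev)
    return output


rng = random.Random(0)
enc_wx, enc_wh = rand_matrix(HIDDEN, VOCAB, rng), rand_matrix(HIDDEN, HIDDEN, rng)
dec_wx, dec_wh = rand_matrix(HIDDEN, VOCAB, rng), rand_matrix(HIDDEN, HIDDEN, rng)
w_out = rand_matrix(VOCAB, HIDDEN, rng)

context, states = encode([2, 3, 4, 5, 2], enc_wx, enc_wh)
print(len(context), len(states))  # 4 5
print(decode(context, dec_wx, dec_wh, w_out))  # arbitrary ids: the weights are untrained
```

Because the weights are random, the generated ids carry no meaning; the point is the structure. Note that `encode` returns a vector of length `HIDDEN` whether the input has 5 tokens or 500, which is exactly the constraint discussed in the next section.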

The Information Bottleneck Problem

The primary limitation of the basic Encoder-Decoder architecture arises from the need to compress the information of the entire input sequence into a single, fixed-size Context Vector. As the input sequence grows longer, the risk of losing information during this compression increases, particularly for details from the beginning of the sequence. Since the Decoder generates the output based solely on this Context Vector, it may struggle to capture local or early details in long sequences. This situation is referred to in the literature as the information bottleneck.

One of the initial approaches to mitigate this problem involved using the Context Vector as an additional input at every time step of the Decoder. While this modification helps maintain context information throughout the decoding process, it does not fully resolve the fundamental bottleneck issue, as the Context Vector still represents a fixed-size summary of the entire input.
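In equation form, using notation assumed here rather than given in the text above ($s_t$ is the Decoder hidden state at step $t$, $y_{t-1}$ the previously generated element, $f$ the recurrent update, and $c$ the fixed Context Vector), the basic Decoder receives $c$ only as its initial state, whereas the variant described above also feeds $c$ in at every step:

$$
\begin{aligned}
\text{basic:}\quad & s_0 = c, \qquad s_t = f(s_{t-1},\, y_{t-1}) \\
\text{variant:}\quad & s_0 = c, \qquad s_t = f(s_{t-1},\, y_{t-1},\, c)
\end{aligned}
$$

In both cases the same vector $c$ is used at every step $t$, which is why the bottleneck remains.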

The Attention Mechanism: Dynamic Context Selection

The Attention mechanism offers an effective solution to the bottleneck problem. Its core idea is to let the Decoder, at each output step, access not only the fixed Context Vector but all of the hidden states produced by the Encoder, and to decide dynamically which parts of the input sequence are most relevant to the output element currently being generated.

This mechanism enables the Decoder to focus its "attention" on different parts of the input sequence, thereby selecting the most appropriate context information for the specific output element being generated.

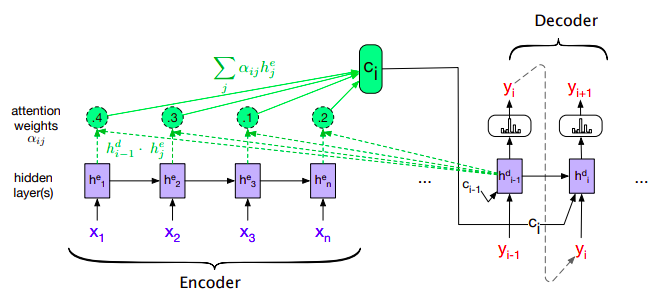

Encoder-Decoder architecture and Attention Mechanism

How the Attention Mechanism Works (Dot-Product Attention Example)

Attention computes a dynamic Context Vector $c_t$ for each Decoder time step $t$. Below, $h_1, \dots, h_{T_x}$ denote the Encoder hidden states for an input of length $T_x$. The computation typically involves the following steps:

- Scoring (Alignment Scores):

  - The relationship, or alignment, between the Decoder's hidden state from the previous time step ($s_{t-1}$) and each of the Encoder's hidden states ($h_i$, where $i$ is the index of the input element) is measured with a score function.

  - A common and simple choice is the dot product: $e_{t,i} = s_{t-1}^\top h_i$.

  - These scores indicate which Encoder states are most relevant to the current Decoder state.

- Weight Calculation (Softmax):

  - The alignment scores are normalized into attention weights $\alpha_{t,i}$ with a softmax function.

  - $\alpha_{t,i} = \dfrac{\exp(e_{t,i})}{\sum_{j=1}^{T_x} \exp(e_{t,j})}$ (the softmax is applied over all $T_x$ scores for step $t$).

  - Each weight lies in the range [0, 1], and the weights for a given step sum to 1. Together, $\alpha_{t,1}, \dots, \alpha_{t,T_x}$ form a distribution indicating how much "attention" should be paid to the $i$-th input element (or its representation) when generating the $t$-th output element.

- Dynamic Context Vector Calculation (Weighted Sum):

  - The Encoder's hidden states $h_i$ are summed, each weighted by its attention weight $\alpha_{t,i}$.

  - $c_t = \sum_{i=1}^{T_x} \alpha_{t,i} \, h_i$

  - The resulting vector $c_t$ is the dynamically computed Context Vector for the $t$-th Decoder step, focused on the most relevant parts of the input sequence. Encoder states with high attention weights contribute more to $c_t$.

- Output Generation (Decoding):

  - The dynamic Context Vector $c_t$ is combined with the Decoder's previous output $y_{t-1}$ and previous hidden state $s_{t-1}$ to compute the current hidden state: $s_t = f(s_{t-1}, y_{t-1}, c_t)$.

  - This new hidden state $s_t$ is then used to predict the output element $y_t$ for the current time step (usually via a softmax layer).
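The scoring, softmax, and weighted-sum steps can be traced in a short sketch. It is plain Python with no framework and assumes the Decoder and Encoder states have the same dimension (required for the dot product); the function names and the numbers in the toy example are illustrative, not from the article. `s_prev` stands for the Decoder's previous hidden state and `enc_states` for the list of Encoder hidden states; the function returns the attention weights and the dynamic Context Vector. The final decoder update is left out, since its form depends on the RNN cell used.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(s_prev, enc_states):
    """One attention step for a single decoder time step.

    s_prev:     previous decoder hidden state
    enc_states: list of encoder hidden states (same dimension as s_prev)
    Returns (attention weights, dynamic context vector).
    """
    scores = [dot(s_prev, h) for h in enc_states]   # alignment score per input position
    alpha = softmax(scores)                         # weights in [0, 1] summing to 1
    dim = len(enc_states[0])
    context = [sum(a * h[k] for a, h in zip(alpha, enc_states))  # weighted sum of states
               for k in range(dim)]
    return alpha, context


# Toy example with made-up numbers: three 2-dimensional encoder states.
enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
s_prev = [2.0, 0.0]
alpha, c_t = dot_product_attention(s_prev, enc_states)
print([round(a, 3) for a in alpha])  # [0.468, 0.063, 0.468]
print([round(x, 3) for x in c_t])    # [0.937, 0.532]
```

The first and third encoder states score equally against `s_prev`, so they receive equal, large weights, while the orthogonal second state receives little attention and contributes little to the context vector. Subtracting the maximum score inside `softmax` leaves the result mathematically unchanged but avoids overflow when scores are large.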

Advantages of the Attention Mechanism

- Alleviation of the Bottleneck Problem: Reduces information loss by removing the constraint of compressing information into a single fixed-size vector.

- Performance Improvement: Improves model performance, especially on long sequences, by giving the Decoder access to any part of the input sequence as needed.

- Interpretability: The attention weights $\alpha_{t,i}$ can be visualized (for example, as a matrix aligning output elements with input elements) and interpreted to understand which parts of the input the model focuses on while generating each output element. This offers insight into the model's decision-making process.

- Flexibility: Besides the dot product, more sophisticated scoring functions with learnable parameters can be used (e.g., Bilinear/General Attention: $e_{t,i} = s_{t-1}^\top W_a h_i$, where $W_a$ is a learnable weight matrix). This increases the model's capacity to adapt to different datasets and tasks.
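The general (bilinear) score differs from the dot product only by a learnable matrix, written here as `W_a`, placed between the Decoder state and the Encoder state. The sketch below, in plain Python with made-up values standing in for learned parameters and hypothetical function names, shows that setting `W_a` to the identity matrix reduces the general score to the plain dot product.

```python
def dot_score(s_prev, h):
    """Plain dot-product score: s_prev^T h."""
    return sum(a * b for a, b in zip(s_prev, h))


def bilinear_score(s_prev, W_a, h):
    """General (bilinear) score: s_prev^T W_a h, where W_a would be learned."""
    W_a_h = [sum(w * x for w, x in zip(row, h)) for row in W_a]  # W_a @ h
    return sum(a * b for a, b in zip(s_prev, W_a_h))


s_prev, h = [1.0, 2.0], [3.0, -1.0]
identity = [[1.0, 0.0], [0.0, 1.0]]
W_a = [[0.5, 1.0], [0.0, 2.0]]  # arbitrary stand-in for learned parameters

print(dot_score(s_prev, h))                 # 1.0
print(bilinear_score(s_prev, identity, h))  # 1.0 (identity W_a = dot product)
print(bilinear_score(s_prev, W_a, h))       # -3.5
```

Because `W_a` may be rectangular, this form also accommodates Decoder and Encoder states of different sizes, which the plain dot product cannot.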

Conclusion

The Encoder-Decoder architecture has served as a foundational building block for Sequence-to-Sequence tasks. However, the bottleneck problem associated with the fixed Context Vector limited its performance. The Attention mechanism effectively overcame this limitation by enabling the Decoder to dynamically focus on the input sequence. This innovation led to significant performance improvements in many NLP applications, particularly machine translation, and paved the way for the development of more modern and powerful architectures like the Transformer. Attention has become an integral component of today's state-of-the-art NLP models.